File: gg.jpg (987.4 KB, 1080x1080)

№4861

'risu on the 'log

№4873

Fuck off.

№4875

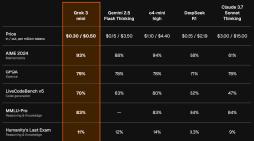

nu-OAI models are raisin

still waiting for china to make agi

№4893

>>4858 (OP)fuck off with your tranime

№4902

>>4893 never forget where you came from.

№4908

we're so back!!!

№4924

home is back

№4970

>>4858 (OP) WARNING: PEDO GENERAL

№5151

>>4858 (OP) Holy raisin, didn't expect /lmg/ to come back before 4chan did.

Now I can get back to my regular schedule.

Qwen3 when?

№5156

>>4858 (OP) Thanks for making the general.

>>5151 As long as they fare well against the new mini OpenAI models, probably as soon as possible.

№5297

>>4858 (OP)
>Microsoft releases Bitnet B1.58
Use case?

№5380

Good to see this thread made it over here. I don't visit often but what's the current meta for LLMs?

Any way to distribute a model across GPUs with various vram capacities? I've got two Tesla M40s, one 24GB and one 12GB.

№5383

>>5297 There isn't really one at present; BitNet is still decidedly in the research and proof-of-concept stage.
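For reference, the "b1.58" in BitNet b1.58 comes from restricting every weight to {-1, 0, +1}, which carries log2(3) ≈ 1.58 bits per weight. The BitNet b1.58 paper quantizes weights with an "absmean" scale: divide by the mean absolute weight, round, and clip to [-1, 1], and it does this during training rather than to an already finished model. Below is a minimal sketch of just that weight step on a plain Python list, assuming a single per-tensor scale; real implementations work on tensors and also quantize activations, which this leaves out. The sample weights are made up.

```python
import math


def absmean_ternary(weights, eps=1e-5):
    """Quantize a flat list of floats to {-1, 0, +1} with a per-tensor absmean scale.

    Returns (ternary, gamma); each original weight is approximated by gamma * t.
    """
    gamma = sum(abs(w) for w in weights) / len(weights)  # mean absolute weight
    # scale, round to the nearest integer, then clip into [-1, 1]
    ternary = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return ternary, gamma


def dequantize(ternary, gamma):
    # map ternary codes back to approximate float weights
    return [gamma * t for t in ternary]


BITS_PER_WEIGHT = math.log2(3)  # about 1.585, hence "b1.58"

w = [0.9, -0.05, 0.4, -1.2, 0.02, 0.6]  # made-up example weights
t, g = absmean_ternary(w)
print(t, round(g, 4), round(BITS_PER_WEIGHT, 3))
print(dequantize(t, g))
```

Small weights collapse to 0 and large ones saturate at ±1, which is why the model has to be trained with this quantization in the loop to stay usable.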

>>5380 It really depends on your use case and what type of model you're aiming to run. Most people use llama.cpp or koboldcpp as their backend, but I'm using tabbyAPI because it runs on ExLlama, which is way faster for models that fit entirely in GPU memory.

>Any way to distribute a model across GPUs with various vram capacities?
Pretty much all current backends have some form of multi-GPU support, but if I recall correctly, Tesla M40s are too old an architecture to support tensor parallelism or NVLink, so there's some noticeable slowdown and overhead when pooling them.
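A rough way to think about splitting one model across a 24GB and a 12GB card: give each GPU a share of the layers proportional to its usable VRAM. llama.cpp exposes this through its `--tensor-split` (`-ts`) option, which takes comma-separated proportions. The helper below is a hypothetical illustration that only computes a layer count per GPU; it is not part of any backend, and the 48-layer model and 1 GB per-card headroom are assumptions.

```python
def split_layers(n_layers, vram_gb, reserve_gb=1.0):
    """Assign layers to GPUs in proportion to usable VRAM (hypothetical helper).

    reserve_gb is an assumed per-GPU headroom for KV cache and scratch buffers.
    """
    usable = [max(v - reserve_gb, 0.0) for v in vram_gb]
    total = sum(usable)
    raw = [n_layers * u / total for u in usable]
    counts = [int(r) for r in raw]
    # hand the leftover layers to the GPUs with the largest fractional remainders
    leftover = n_layers - sum(counts)
    order = sorted(range(len(raw)), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts


vram = [24, 12]  # the two Tesla M40s from the question
print(split_layers(48, vram))  # layers per GPU for an assumed 48-layer model
print("--tensor-split " + ",".join(str(v) for v in vram))
```

Splitting by layers keeps each GPU working on its own chunk in sequence, so the slower inter-card link on old cards mostly costs latency per token rather than blocking the split outright.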

№5386

>>4858 (OP)kept the THREAD ALIVE ARYAN BABY

№5387

>>4858 (OP) here, samefagging doe.

do you know about a vidya called MyRobot? It can use LLMs (e.g. running on your NVIDIA GPU, among other options) and also supports AI voice generation.

№5416

>>5387
>MyRobot
It seems pretty cool, but Skyrim (VR) kind of already has the whole framework for interacting with LLM NPCs set up:

> VR support
> Massive pool of mods
> Voice generation tools available
> Integration of the world environment (including the game's scripting system) with an LLM: Mantella or CHIM

№5436

>>5416 The game does something like that too, but with a different spin: the robots can be personalized in many combinations, and the main point is being able to have stable conversations without nonsensical stuff.

It also lets you use existing AI like DeepSeek, whose reasoning model is very advanced.

№5447

>>4858 (OP) Sam's o3 and o4-mini are amazing. Local lost.

№5451

NIGGER SLAVE GENERAL

raisin EATING PAJEET GENERAL

№5454

>>5451sir please be wholesome bloody bastard dalit

№5462

>>5461 I don't cry like this and my RAM isn't RGB

№5508

>>5465 R2 in 2 more weeks, trust the plan

№5575

>>4858 (OP)stfu AI pajeet

№5583

>>5465DeepSikh was always coal.

№5656

>>5416 (You)
>The game does something like that too but in a different spin, the robots can be personalized with many combinations and the main part is being able to have stable conversations without nonsensical stuff.
>Also it allows using already existing AI like deepseek which their logical model is very advanced.
I would expect any game that's serious about AI integration (and I'm of course not referring to generic neural network tricks like super-resolution) to offer the option to call any local LLM.

>>5465 It's pretty much tied with R1 on my internal benchmark. As far as I'm aware, OAI uses Q* internally, so if the results are currently tied, R1 + R* would beat o4 high. No reason for me to switch ;P

№5730

>>4894China will manage. US AI companies are ngmi, because they know what they are doing isn't the way to ever achieve AGI. Its one big lie to keep the money rolling it.

Even with the GPU handicap they dealt to China, they will still win. Because China doesn't give a fuck.

№5880

>>5854>The US starts building a great firewall around China from the Outside.Kek, it's amazing just how butthurt deepseek has made saltman and his fellow kikes in the us gov.

№5926

File: peterson.png (71.57 KB, 255x207)

>>5854>blocking open source tech because you cant get your cut or soemthingameriniggers always trying to slow down progress because of their selfishness

№5949

>>5854 >>5880 >>5926 Much ado about nothing. The US lacks the legal and technical framework to prevent determined end users from using Deepseek. The best they might be able to do is enforce non-use on corpos.

№6027

>>5949 I'm not concerned about them actually stopping me from using Deepseek; I'm not even American.

But the precedent is concerning, especially since Hugging Face is based in the US, and that's basically where local LLM/ML lives in its entirety. If they nuked all the Chinese stuff, or whatever else upsets corpos, it would suck hardcore and set us all back.

№6045

Seriously though, why is this thread here instead of 8kun? We're moments away from getting spammed by jaks and nikado asses on here.

№6052

>>6045 Last time I went to that place it was deader than my boner after trying to ERP with Gemma

№6058

>>6052 Currently it has about the same posts per hour as this site's /pol/, only 0% of its posts are spammed jaks and cobs with continually indented quotes.

/sp/ appears to have moved a bunch of their generals to 8kun's /comfy/, and a few others are setting up in /random/ and /pol/ because they're more active boards.

I think roughly half the userbase is 4chan refugees right now, and the other half are just quietly ignoring us and sitting in /qresearch/

№6248

bump

№6249

qwen3 when?

№6252

>>6249 Integration of Qwen3 into vLLM is already being worked on; it should release soon.

https://github.com/vllm-project/vllm/pull/15289

>>5949 There are absolute scumlords like Josh Hawley who want you to go to jail for 20 years for downloading Deepseek R1

https://www.fox29.com/news/deepseek-ban-senate-bill

So >some< are trying to make it legal to hunt down… free people

№6253

>>6249 2 more weeks.

The real question is whether it'll come out before 4chan is back.

>>6252
>implementation of the Qwen3 and Qwen3MoE model.
Oh neat, so we're getting dense and MoE. Both GPUmaxxers and RAMmaxxers will have something to chew on.
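Why MoE suits the RAM crowd: in a mixture-of-experts layer a router picks only a few experts per token, so only a fraction of the expert weights is read for each token. A minimal top-k gating sketch for a single token, assuming k=2 and 8 experts purely for illustration (these are not Qwen3MoE's actual settings, which the PR text above doesn't state). Taking the softmax over just the top-k logits gives the same weights as a full softmax renormalized over those k.

```python
import math


def top_k_route(router_logits, k=2):
    """Pick k experts for one token and return (expert_index, gate_weight) pairs.

    Softmax over only the top-k logits equals a full softmax renormalized over those k.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    z = sum(exps)
    return [(i, e / z) for i, e in zip(top, exps)]


def active_expert_fraction(k, n_experts):
    # share of the expert FFN weights touched per token; attention and
    # embedding weights are dense and are read for every token regardless
    return k / n_experts


routes = top_k_route([0.1, 2.0, -1.0, 1.0, 0.3, -0.5, 0.0, 1.5], k=2)  # made-up logits
print(routes)
print(active_expert_fraction(2, 8))
```

The low per-token read volume is what makes running MoE weights from system RAM tolerable, while a dense model of the same total size has to stream every weight for every token.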

№6268

>>6253
>2 more weeks.
>The real question is whether it'll come out before 4chan is back.
4cuck is finished unless they use vichan

№6423

>>6253
> Oh neat, so we're getting dense and MoE. both GPUmaxxers and Rammaxxers will have something to chew on.
Rammaxxer here. I hope they give you the option of multiple sizes, 7B/32B/700B or something like that, but I expect there will only be distills of the biggest model.

№6486

>>6423 One of the Qwen devs mentioned that the reason this particular release was taking so long was the wide variety of model sizes, and wondered whether they should ditch that for future releases. So you may be in luck here, unlike with Llama 4's dograisin, which would have been a problem even if the models didn't suck.

№6866

File: 574846.jpeg (157.64 KB, 2048x1131)

local 32b o3 mini when? Surely Zuck has something planned. Maybe Altman or Elon will open source it.

№6923

>>4858 (OP) this place won't beat 4chan, it's raisintier.

№6944

>>6807

Let's fucking gooooo

№7069

>>6866 Altcuck promised to release an open source model, still waiting on that.

I bet he was busy sucking off the Trump administration's dick; he really wants the competition dead

№7131

>>5151 this

/lmg/ and webm with sound. this is new /g/ now.

№7201

what's a raisin?

№7212

>>7151
>first image i see,
Legitimately how? I had to look for that post for like 2 straight minutes because it's from 8 YEARS AGO.

It's so far down I can't even see it in the catalog at 4K resolution. How the fuck was that the first thing you saw rather than /lmg/ or the AI art thread, which are at the top of the board?

>>7201 It's the gay sharty wordfilter for SHlT

№7246

>>7212
>how
change your list order

№7309

>>7132

promote your tranny board somewhere else pedo

№7325

>>7132

>8kun pedo shithole

№7400

File: Qwen2.5 Omni.png (706.39 KB, 2180x1214)

Given that threads here last much longer, some news updates:

- TensorRT-LLM: Llama 4 and Phi-4-MM are now supported

- Transformers: Adds support for Qwen2.5 Omni (a model with speech, text, image and video support)

- Deepseek is about to release their internal inference engine for Deepseek R1 and Deepseek V3

№7418

>>7246 To friggin what? If I list by bump, creation, or reply count, it's still at the bottom, because again, it's a dead thread from 8 years ago.

>>7309 >>7325 You're thinking of 8chan.moe, samefag. 8kun banned loli, which was the impetus for creating .moe, and why .moe is full of degen boards like /abdl/ and raisin.

But by all means stay here where board culture is spamming gifs of obese men's assholes - stay in your element.

№7508

>>5465 Holy raisin, look at that coding jump. This has got to be benchmaxxed, right? It can't be THAT good.

№7525

>>6249 At this rate, we might get R2 first

>>6252
>deepseek becomes the new 'p

№7671

>>7491 Gem for coding assistance

№7752

Where is Petra, wasn't that her homeboard?

№7755

>There's some schizo talking to himself about someone nobody's ever heard of in two different /lmg/ threads on completely different sites

I don't remember us having a resident schizo other than the blacked miku guy, the fuck is this?

№7783

>>7755 This is the 4th /lmg/ thread.

№7788

>>5465
<unironically believing those fake benchmarks
the people who create these problem banks don't know any math and bloat it with highschool olympiad trash. That's why a random problem from a Springer Graduate Text math book makes even o3 raisin its pants. Try it out yourself on arena llm

№7906

>>5465

><unironically believing those fake benchmarks

>the people who create these problem banks don't know any math and bloat it with highschool olympiad trash. That's why a random problem from a Springer Graduate Text math book makes even o3 raisin its pants. Try it out yourself on arena llm

This is pretty consistent with my own observations.

Even asking an LLM to transform some non-trivial nondeterministic finite automaton (one that isn't already deterministic, ofc!) into a deterministic finite automaton or vice versa, or to build a regex from it and give some examples of accepted words, is beyond the capability of current AI, including OpenAI's latest slop. Tested it.
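For comparison, the NFA to DFA direction is the textbook subset (powerset) construction, which is mechanical enough to check a model's answer against. A minimal sketch, assuming an NFA without epsilon moves, given as a dict from (state, symbol) to a set of states; the example NFA (strings over {a, b} whose second-to-last symbol is 'a') is a hypothetical test case, not one from the post.

```python
from collections import deque


def nfa_to_dfa(alphabet, delta, start, accepting):
    """Subset (powerset) construction for an NFA without epsilon moves.

    delta maps (state, symbol) -> set of NFA states; a missing key means no move.
    DFA states are frozensets of NFA states; only reachable subsets are built.
    """
    dfa_start = frozenset([start])
    dfa_delta, dfa_accepting = {}, set()
    queue, seen = deque([dfa_start]), {dfa_start}
    while queue:
        subset = queue.popleft()
        if subset & accepting:  # accept if any member NFA state accepts
            dfa_accepting.add(subset)
        for sym in alphabet:
            target = frozenset(q for s in subset for q in delta.get((s, sym), ()))
            dfa_delta[(subset, sym)] = target
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return dfa_delta, dfa_start, dfa_accepting


def accepts(dfa, word):
    dfa_delta, state, dfa_accepting = dfa
    for sym in word:
        state = dfa_delta[(state, sym)]
    return state in dfa_accepting


# hypothetical NFA over {a, b}: the second-to-last symbol is 'a'
delta = {(0, "a"): {0, 1}, (0, "b"): {0}, (1, "a"): {2}, (1, "b"): {2}}
dfa = nfa_to_dfa("ab", delta, start=0, accepting={2})
print(len({subset for subset, _ in dfa[0]}))  # number of reachable DFA states
for w in ["ab", "aa", "ba", "bab", "b"]:
    print(w, accepts(dfa, w))
```

The reverse direction needs no work, since every DFA already is an NFA; turning an automaton into a regex (e.g. by state elimination) is a separate algorithm not shown here. In the worst case the subset construction can produce 2^n states, which is part of why it's tedious to do by hand.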

№7913

>>7906 Have you ever had them try to prove something? I just tried o3 and o4-mini, and they both insisted that the product of two separable topological spaces need not be separable, which is retarded. Only after I proved to them that the product is always separable did they stop insisting; just telling them it was wrong didn't help.
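For reference, the standard argument the models were missing fits in a few lines (written for two factors; the same idea covers any finite product):

```latex
\textbf{Claim.} If $X$ and $Y$ are separable, then $X \times Y$ (product topology) is separable.

\textbf{Proof.} Let $D_X \subseteq X$ and $D_Y \subseteq Y$ be countable dense subsets.
Then $D_X \times D_Y$ is countable. Let $W \subseteq X \times Y$ be nonempty and open.
By definition of the product topology, $W \supseteq U \times V$ for some nonempty open
$U \subseteq X$ and $V \subseteq Y$. Density gives $x \in U \cap D_X$ and $y \in V \cap D_Y$, so
\[
  (x, y) \in (U \times V) \cap (D_X \times D_Y) \subseteq W \cap (D_X \times D_Y).
\]
Hence $D_X \times D_Y$ meets every nonempty open set, i.e. it is a countable dense subset. $\blacksquare$
```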

№8005

>>7913 >>7906 >>7788 I have a feeling we're starting to hit a hard ceiling for LLMs and have to look elsewhere for reasoning capabilities

№8076

>>7913 I'm not surprised, because proofs like this are part of my standard set of questions for LLMs, starting with relatively simple stuff. There is a simple proof that for n ∈ ℤ, n² even implies n even. Most LLMs still insist on a direct proof (which leads to a circular argument in this case), while you've got to use a proof by contraposition.

Some LLMs start to cope and argue, some say sorry and thank me for giving the hint only to make it wrong another time and some… actually get it right =)
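The contraposition proof mentioned above, written out: instead of starting from "n² is even" (where a direct attempt tends to smuggle in the conclusion), start from "n is odd".

```latex
\textbf{Claim.} For $n \in \mathbb{Z}$: if $n^2$ is even, then $n$ is even.

\textbf{Proof (contrapositive).} Assume $n$ is odd, so $n = 2k + 1$ for some $k \in \mathbb{Z}$. Then
\[
  n^2 = 4k^2 + 4k + 1 = 2\,(2k^2 + 2k) + 1,
\]
which is odd. So ``$n$ odd $\Rightarrow$ $n^2$ odd'' holds, and this is logically equivalent to
``$n^2$ even $\Rightarrow$ $n$ even''. $\blacksquare$
```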

№8090

>>7913
>Some LLMs start to cope and argue, some say sorry and thank me for giving the hint only to make it wrong another time and some… actually get it right =)
That happens a lot to me too. The best results I've had so far were when I gave Manus a giant textbook and other supplementary material and asked it to solve the problems of a specific section.

№8171

>>8090 Manus? Not open source. How does it work? Are they vectorizing that textbook input for RAG? Who knows…

MCTS, R*, ensemble voting and such do improve performance quite a bit as well, at a high computational cost.

I'd actually love to test LLaDA's capability in mathematical domains soonish. I can imagine that type of NN performing certain "planning" tasks like proofs a bit better… possibly
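The "ensemble voting" idea from the post above, in its simplest form: sample the same question several times, extract each completion's final answer, and keep the most common one. A minimal sketch, assuming the sampling and answer extraction already happened (the answer strings below are hypothetical); the cost scales linearly with the number of samples, which is the computational price the post mentions.

```python
from collections import Counter


def majority_vote(answers):
    """Return (most_common_answer, agreement_ratio) over sampled final answers.

    Whitespace is stripped before counting; ties go to the answer seen first,
    since Counter.most_common orders equal counts by first insertion.
    """
    normalized = [a.strip() for a in answers]
    best, n = Counter(normalized).most_common(1)[0]
    return best, n / len(normalized)


# hypothetical final answers extracted from five sampled completions
samples = ["42", " 41", "42 ", "40", "42"]
print(majority_vote(samples))
```

The agreement ratio is a cheap confidence signal: a low value suggests the samples disagree and the answer deserves a second look.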

№8322

>>5508 what realistically stops them from distilling o3 and o4

№8472

Test 1 2

№8884

>>8322 Does OpenAI even expose the <think> tags now?

№9013

>>8911Any troon bluesky bio would work I guess

№9068

File: ?=333nXt5%.png (31.84 KB, 465x419)

>>4858 (OP) It seems like nothing is happening over Easter, in the interregnum between Deepseek R1 and the soon-to-be-released Qwen 3, so I'm recapping my personal all-time favorite models:

Historical

> GPT-J, OPT: Old but gold. Waiting for the next token to be shown, sentences being pieced together part by part… The curiosity of having semi-coherent discussions with my graphics card is something I will never forget.

> Pygmalion: Makes me pretty nostalgic; the novelty of it all back then was something to remember.

> LLaMA 1: Really started the whole finetuning scene. Alpaca made conversations much more coherent.

> Llama 2: Quite a lot better than Llama 1, but most importantly it spawned a wide variety of finetunes, including all-time favorites like MythoMax.

> Mistral v0.1: Solid base model with a lot of finetunes like OpenHermes 2 or NeuralChat.

> Mixtral: The first time open source ever came close to proprietary performance. Great model for its time. The team behind it remains relevant, albeit not in the top tier.

Also, for me, it was the first local model that was actually useful for some coding assistance.

> Llama 3: Lame base model, but the upgraded versions were better. Spawned a number of okayish finetunes or something.

> Mistral Nemo: Good model for RP, retarded for other purposes. I use it to drive Skyrim NPCs in the form of Nemo uncensored.

> Gemma 2: Decent model for coding and general knowledge, but superseded by Gemma 3 and Qwen 2.5 x R1 / QwQ.

> Qwen 2.5 R1 Distilled / QwQ: My current daily drivers for coding assistance. The sweet spot between size and performance.

> Deepseek R1: The big one, the king, nothing else to add currently. It really shines in every category, but all "intelligent" systems have their limits, and so do current frontier models.

I mostly use it to discuss more complex engineering processes where I really need the breadth and depth of knowledge in that large network.

> Llama 4: Its future shines as bright as 4cuck's.

Some chuds also appreciated "Frankenmerges", although I have no personal experience with them.

Along the way we got many more or less important upgrades regarding samplers, optimization, training procedures, data curation, GUIs, …, and a whole bunch of inference frameworks (llama.cpp, ktransformers, vLLM, TensorRT, …), with transformers getting plenty of upgrades too!

Qwen 3, it's your turn now

№9269

>>9068 I think deepseek-v3-0324 is better than R1 for anything non-technical.

And yeah, the staying power of Nemo is unreal. It will always be a gem, even if its days are likely numbered with the approach of whatever succeeds QwQ/Gemma 3.

№9510

I'm new to LLMs, and someone told me about huggingface to get GUFF models from, which seem to work on a base level. But whenever I ask any model about the harms caused by letting niggers and jews live, it refuses. Are there better places to get models from that aren't touched by Mossad?

№9513

>>9510 Use an uncensored model and a good system prompt

№9516

>>9510 https://huggingface.co/spaces/DontPlanToEnd/UGI-Leaderboard
Take a look at the UGI (Uncensored General Intelligence) score and choose.

Note that base models (pure text completion) are usually completely uncensored. The censorship is applied during instruct finetuning.

№9719

What's the latest meta on GUIs? I'm mostly using the llama.cpp CLI, but I'd love to have something more immersive. I used to use text-generation-webui, but the dev is retarded to a degree, and SillyTavern is written in JavaScript, which I have no experience with.

№9910

llama.cpp has a web UI, but for RP, or if you're using an API, SillyTavern is the best

№10026

>>10016 Call me when it's done on a model with an actually useful parameter count.

Until they attempt bitnet with a model around 12B+, they're really just little research toys.

№10142

What's a good LLM for 24GB VRAM? Something smart and good at following the character.

№10598

Qwen never ever

№10606

>>10026 qwen promised bitnet models

№10620

>>10598 Did they actually promise it this month or did we just speculate that it would be this month?

№10762

>>10142 Try QwQ or Gemma 3 27B
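A back-of-the-envelope check for what fits in 24GB: weight memory is roughly parameter count × bits per weight / 8. The sketch below assumes about 4.5, 6 and 8 effective bits per weight as stand-ins for common quant levels (exact figures vary by format) and treats QwQ as 32B; whatever is left over has to cover context (KV cache) and backend overhead, which this ignores.

```python
def weights_gb(params_billion, bits_per_weight):
    """Rough size of the quantized weights in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations and backend overhead, which need extra room.
    """
    return params_billion * bits_per_weight / 8


VRAM_GB = 24
for name, params in [("QwQ 32B", 32), ("Gemma 3 27B", 27)]:
    for bpw in (4.5, 6.0, 8.0):  # assumed effective bits per weight of typical quants
        size = weights_gb(params, bpw)
        print(f"{name} @ {bpw} bpw: ~{size:.1f} GB of weights, {VRAM_GB - size:+.1f} GB left")
```

By this estimate both models fit comfortably at around 4 to 5 bits per weight with room for context, while 8-bit weights for a 32B model alone would already exceed 24GB.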